The computing landscape is undergoing a tectonic shift. In an era where data is the new oil, companies cannot afford to overlook the challenges and opportunities of massive datasets. With AI models growing rapidly in size and organizations relying on vast amounts of data for mission-critical decisions, efficient data storage and retrieval systems are paramount.

The crux of the matter? Efficiently storing and accessing these massive datasets will determine the pace of AI advancements, the efficacy of data-driven insights, and the overall competitiveness of businesses. Without a robust, scalable, and efficient storage system in place, even the most sophisticated AI models will falter.

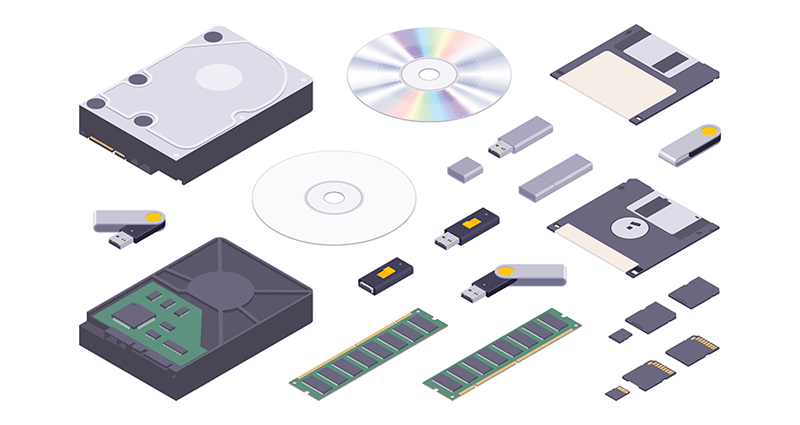

A Glimpse Into Storage Solutions: Pros and Cons

Your choice of storage solution isn’t merely a matter of preference. It can affect infrastructure performance, cost efficiency, and data security, among other things, so matching the storage type to your use case deserves careful thought. To help you choose, consider six popular storage solutions:

1. Traditional Relational Databases:

- Pros: Mature and well understood, relational databases offer structured storage, ACID transactions (atomicity, consistency, isolation, and durability, the properties intended to guarantee data validity), and a rich query language.

- Cons: They can become performance bottlenecks with very large datasets. Scaling horizontally (adding more servers) is also challenging because they were traditionally designed around a single server.

- Use Case: E-commerce platforms use a relational database to manage inventory, track customer orders, and handle transactions.
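
As a rough illustration of what ACID transactions buy an e-commerce workload, here is a minimal sketch using Python’s built-in `sqlite3` module. The `inventory` and `orders` tables and the `place_order` helper are hypothetical names chosen for this example, not part of any particular platform.

```python
import sqlite3

def place_order(conn, sku, qty):
    """Record an order and decrement stock as one atomic unit."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("INSERT INTO orders (sku, qty) VALUES (?, ?)", (sku, qty))
            cur = conn.execute(
                "UPDATE inventory SET stock = stock - ? "
                "WHERE sku = ? AND stock >= ?",
                (qty, sku, qty),
            )
            if cur.rowcount == 0:
                # Not enough stock: raising here rolls back the INSERT above too.
                raise ValueError("insufficient stock")
        return True
    except ValueError:
        return False

# Hypothetical schema, kept in memory for the demo.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, stock INTEGER NOT NULL)")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, sku TEXT, qty INTEGER)")
conn.execute("INSERT INTO inventory VALUES ('widget', 5)")
conn.commit()

first = place_order(conn, "widget", 3)   # enough stock, both writes commit
second = place_order(conn, "widget", 3)  # only 2 left, so nothing is written
```

Because both statements run inside one transaction, a failed stock check also undoes the order row, so the two tables never disagree.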

2. Distributed File Systems (e.g., Hadoop’s HDFS, Google’s GFS):

- Pros: Built for massive datasets and horizontal scalability. They break data into chunks, replicate those chunks, and distribute them across a cluster of machines, which provides fault tolerance and redundancy.

- Cons: They might not offer the same transactional guarantees as traditional databases. Moreover, they usually work best with batch processing systems, which might not be ideal for real-time analytics.

- Use Case: A multinational company gathers log data from its various global operations. It uses a distributed file system to store and process these vast datasets, taking advantage of the system’s ability to scale across multiple servers and handle petabytes of information.
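
The chunk-and-distribute idea can be sketched in a few lines of Python. This is a toy model under stated assumptions: the function names, the round-robin placement, and the tiny chunk size are all illustrative, and real systems such as HDFS use far larger blocks and more careful, rack-aware placement.

```python
def split_into_chunks(data: bytes, chunk_size: int) -> list[bytes]:
    """Cut a byte string into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]

def place_replicas(num_chunks: int, nodes: list[str], replication: int = 3) -> dict[int, list[str]]:
    """Assign each chunk to `replication` distinct nodes, round-robin (illustrative policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        i: [nodes[(i + r) % len(nodes)] for r in range(replication)]
        for i in range(num_chunks)
    }

def readable_after_failure(placement: dict[int, list[str]], failed: set[str]) -> bool:
    """True if every chunk still has at least one replica on a healthy node."""
    return all(
        any(node not in failed for node in replicas)
        for replicas in placement.values()
    )

chunks = split_into_chunks(b"x" * 10, chunk_size=4)
placement = place_replicas(len(chunks), ["n1", "n2", "n3", "n4"], replication=3)
survives_two = readable_after_failure(placement, {"n1", "n2"})
survives_three = readable_after_failure(placement, {"n1", "n2", "n3"})
```

With three replicas per chunk, losing any two nodes in this sketch still leaves every chunk readable, while losing three can take a chunk offline.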

3. Object Storage (e.g., Amazon S3):

- Pros: Offers scalability and durability by treating data as objects (instead of files or blocks) and storing them in a flat namespace. Each object is retrieved directly by its key, which works especially well for unstructured data.

- Cons: Latencies can be higher than block storage or file systems, and there can be costs associated with frequent data access.

- Use Case: A media streaming service hosts millions of video and audio files. It uses object storage for its high scalability and durability, storing each media file as an object with associated metadata, making retrieval efficient.
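
To show what a flat namespace with per-object metadata looks like, here is a small in-memory sketch. `FlatObjectStore` and `StoredObject` are hypothetical classes written for this example and do not mirror the API of Amazon S3 or any other service.

```python
from dataclasses import dataclass, field

@dataclass
class StoredObject:
    data: bytes
    metadata: dict = field(default_factory=dict)

class FlatObjectStore:
    """Toy object store: one flat key space, no real directories."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata) -> None:
        # The key is an opaque string; any "/" in it is just a character.
        self._objects[key] = StoredObject(data, dict(metadata))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def list_keys(self, prefix: str = "") -> list[str]:
        # "Folders" are simulated by filtering on a key prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))

store = FlatObjectStore()
store.put("videos/intro.mp4", b"\x00\x01", content_type="video/mp4", title="Intro")
store.put("audio/theme.mp3", b"\x02", content_type="audio/mpeg")
video = store.get("videos/intro.mp4")
video_keys = store.list_keys("videos/")
```

The metadata travels with each object, so a lookup by key returns both the media bytes and the details needed to serve them.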

4. NoSQL Databases (e.g., Cassandra, MongoDB):

- Pros: Designed for scalability, flexibility, and high throughput. They offer various data models (key-value, document, column-family, graph) to fit specific use cases.

- Cons: They often sacrifice ACID properties for scalability and performance, which might require additional application logic to handle consistency and other data challenges.

- Use Case: Social media platforms need to store user profiles, their posts, and the complex web of connections between users. With its flexible schema and capability to handle unstructured data, a NoSQL database is well-suited for this dynamic and vast dataset.

5. In-memory Databases (e.g., Redis, Apache Ignite):

- Pros: Lightning-fast data access by storing data in RAM. Suitable for use cases demanding real-time analytics and high-speed transactions.

- Cons: Capacity is limited by the system’s memory, and RAM costs more per gigabyte than disk. Also, data can be lost on a crash or restart if it is not persisted correctly.

- Use Case: A financial institution offers real-time stock trading for its users. An in-memory database ensures that stock prices, user portfolios, and transaction histories are updated and accessed in real time, facilitating fast trading decisions.
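
The persistence caveat is easy to see in a toy model. The sketch below keeps data in a Python dictionary and writes a snapshot to disk only when asked. `SnapshotStore` is a hypothetical class for illustration and is not how Redis or Apache Ignite implement persistence.

```python
import json
import os
import tempfile

class SnapshotStore:
    """Toy in-memory key-value store with explicit snapshots to a JSON file."""

    def __init__(self, path: str):
        self.path = path
        self.data = {}
        if os.path.exists(path):
            # Simulates a restart: reload whatever the last snapshot captured.
            with open(path) as f:
                self.data = json.load(f)

    def set(self, key: str, value) -> None:
        self.data[key] = value  # lives in RAM only until snapshot() runs

    def snapshot(self) -> None:
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(self.data, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp, self.path)  # swap the new snapshot into place atomically

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "prices.json")
    store = SnapshotStore(path)
    store.set("ACME", 101.5)
    store.snapshot()
    store.set("INIT", 42.0)           # written after the last snapshot
    restarted = SnapshotStore(path)   # simulated restart
    recovered = dict(restarted.data)
```

After the simulated restart, only the value written before the snapshot comes back, which is the kind of loss the bullet above warns about.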

6. GPU-Accelerated Databases (e.g., BlazingSQL, Kinetica):

- Pros: Designed to leverage the parallel processing capabilities of GPUs. They’re particularly useful for AI workloads that demand immense computational power.

- Cons: They might be overkill for simpler applications. Hardware requirements can also make them more expensive.

- Use Case: A healthcare research institute runs complex AI models to analyze medical imagery for signs of disease. Using a GPU-accelerated database, it can process and analyze massive amounts of imaging data quickly by running the work in parallel across GPU cores.

Anticipating the Storage Needs of Tomorrow

Organizational data growth shows no sign of slowing down. To keep up with storage density and latency needs, we’re seeing advancements in technologies like multi-modal storage, quantum storage, and storage-class memory. These technologies promise much higher speeds and blur the line between memory and storage.

However, advanced storage solutions need a foundation of advanced hardware. We’ve partnered with Solidigm to provide our clients with the broadest NVMe SSD portfolio on the market, with form factors that fit every use case and computing environment.

Solidigm’s hardware provides a compelling balance of price and performance, helping users achieve a positive ROI faster. Their solutions suit everything from complex AI algorithms to simpler data storage needs. Learn more at Equus.